Cloud computing, AI, and the fight for digital independence

What does “sovereignty” mean in cloud computing? Why is it core to Hivenet’s mission? This article will answer these questions by exploring three trends: regulation, national security, and public sentiment. We will see a growing imperative for individuals, businesses, and states alike to take control of their technological destiny - and that Hivenet’s services are built from the ground up to help them achieve it.

Sovereignty as law: regulation and technology

Let’s start with a definition: while sovereignty [1] has a few meanings, its root is the Old French word “soverain” which means “highest; supreme; chief” [2]. In current times, sovereignty is often used in the sense of a “self-governing” state with authority over its affairs - and this usage of the word carries over into the concept of “digital sovereignty.” In 2021, the leaders of Estonia, Denmark, Finland, and Germany wrote to the President of the European Commission to emphasize the importance of “digital sovereignty” for the European community [3]. They sought to ensure that Europe could grow its technical capacity and retain its independence in the face of a market controlled by larger powers.

To address these concerns, the EU went on to release regulations such as the Digital Markets Act and the AI Act, complementing existing ones like GDPR (General Data Protection Regulation). As the World Economic Forum (WEF) noted earlier in 2025, we exist in a landscape of complex and fluid regulations as global powers strive to control and maximize the benefits of technological progress. Growing concern in the EU about the dominance of the US and China has driven a “proactive stance” on regulation that has been influential in other jurisdictions (the “Brussels effect”; see, e.g., The Brussels Effect and Artificial Intelligence) [4, 5].

We are not here to debate the pros and cons of specific policies - the fact is that regulations exist, and compliance with specific data, security, and operational controls is a challenge for businesses of all types and sizes. One solution favored by cloud hyperscalers is partnering with states and trusted local partners to achieve segregation between an underlying, “sovereign” infrastructure and the platforms and services that run on top (e.g., AWS European Sovereign Cloud, Microsoft Azure’s Cloud for Sovereignty, and Google Cloud’s Sovereign Cloud [6, 7, 8]). This approach is said to balance access to the features, power, and know-how of a cloud hyperscaler with “by default” adherence to necessary regulations.

However, this approach has a few key issues, as shown in Table 1.

Table 1: limitations of the “Sovereign Cloud” solution to data sovereignty

For evidence of the struggles of large technology companies to comply with key regulations, we only have to look at the history of major fines for GDPR-related breaches in recent times (e.g., [10, 11, 12]). So far, we have discussed the regulatory aspects of cloud computing and highlighted that the “sovereign cloud” approach should be subject to skepticism. We will return to how Hivenet’s services address these concerns, but first, let’s consider another state-level dimension: the role of geopolitics in access to resources and the risk of state-backed cyber attacks.

Sovereignty as policy: geopolitics, cyberattacks and the cloud

Aside from policies designed to protect digital sovereignty, the last 5-10 years have been marked by increased geopolitical tensions that some characterize as a return to “mercantilism.” Mercantilism was an economic model common in Europe during the 16th to 18th centuries, which emphasized using a state’s economy to maximize its power at the expense of its “rivals” [13]. Today’s “neo-mercantilism” includes US government responses to perceived trade aggression by China and the US desire to “out-compete” through investment and strategic cooperation with “allies and partners” [14, 15]. The most recent example is the effort to control the flow of AI chip exports to favored nations (including the EU, UK, and others) in order to prevent supply to China [16]. As noted above, the major cloud providers are US-based - and increasingly aligned with US government policy - which makes this a clear risk to consistent access to cloud computing services.

Another factor is the growth in state-backed cyber attacks as a tool of foreign policy [e.g., 17]. While not a new phenomenon, the number of observed attacks is increasing, and the risk of data exfiltration, denial of service attacks, etc., is growing. A perceived benefit of cloud hyperscalers is that security is a strategic differentiator for them, which drives a higher level of investment and innovation in keeping data and workloads secure than end-users could achieve on their own. However, as with regulation, there are risks - we’ve summarized the key ones in Table 2.

Table 2: limitations of public cloud security

While higher ideals like individual freedom and the right to privacy do shape attempts at policy (e.g., in the EU), national security concerns remain a dominant force in shaping who can leverage what technological capabilities (e.g. [21] and [22]), and motivating threat actors to target “flagship” infrastructure.

We have covered state-level concerns from two different perspectives; we will now move to the final theme - shifting public sentiment - before wrapping up with a review of how Hivenet’s distributed services mitigate the issues we’ve discussed.

Sovereignty as freedom: the influence of consumer sentiment

In the 2010s, as the first major wave of “digital transformations” and public cloud migrations was underway, a clear shift was taking place in IT strategy: decision-makers were bringing expectations from consumer-facing products into the workplace, asking why their systems did not work the same way … and whether maybe they should?

At this time, it was also becoming clear that consumer experience was driving enterprise adoption of products like Slack, Asana, and Google Drive - a process sometimes known as B2C2B sales (Business to Consumer to Business) and a big shift from long-standing “C-level and down” tactics [23, 24]. We can also look at how Microsoft leveraged an embrace of open-source and the acquisition of brands like LinkedIn and GitHub to convince formerly skeptical engineers that it had changed. Wider consumer understanding and acceptance of distributed services is relatively new but is growing as perceptions shift [25].

In contrast, we see a marked decline in public trust in large technology companies: their business practices, approach to privacy, and responsible stewardship of critical public platforms (e.g., [26]). Recent events like the “ban or sale” of TikTok (after a remarkable period of growth) and discomfort about the training of large language models with user data continue to drive interest in alternatives [27, 28]. Bluesky, Mastodon, PixelFed … there are various options, each with trade-offs, but there remains a growing desire for individual sovereignty. One 2022 report indicated that a mere 28% of respondents (global average) were willing to trade personal data for free services, while 34% desired tighter regulation of the technology sector [29]. Similarly, another study investigated the influence of “Big Tech” on GenAI policy. It showed how declining public trust - and considerable public support for the regulation of GenAI - matched increasing lobbying spend by these companies [30]. Considering how consumer perceptions have recently become more influential on enterprise IT strategy, we can reasonably expect a similar impact for distributed computing services like Hivenet’s. With that in mind, we will now lay out how Hivenet’s platform empowers our users to reduce their exposure.

Sovereignty: at the heart of Hivenet’s services

So far, we’ve focused a lot on the problems that users and decision-makers face in today’s market for cloud infrastructure. With our deep-rooted belief in the possibilities of distributed computing, Hivenet’s services offer a way to mitigate or even eliminate these concerns.

Distributed systems are resilient systems

First, one of the key advantages of distributed systems is removing “authority,” or single points of control and failure. This has the following implications:

- The risk that a government order (e.g., under the US CLOUD Act) will allow unwanted access to your data is reduced (or even removed, depending on the placement strategy).

- Even if a threat actor can access nodes in the network, their ability to access data is limited: encrypted data blocks are spread across multiple nodes, with redundancy built in, meaning it would not be possible to reassemble your data or hold it hostage.

- A distributed architecture is not dependent on monolithic data center “regions,” increasing your resilience to localized outages.
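
To make the second and third points more concrete, here is a minimal Python sketch of block placement with redundancy. It is an illustration only, not Hivenet’s actual implementation: the function names, node names, block size, and the round-robin placement rule are all assumptions made for this example, and encryption of each block is left out to keep the sketch within the standard library.

```python
import hashlib


def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut data into fixed-size blocks (the last one may be shorter)."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks: list[bytes], nodes: list[str], replicas: int) -> dict[str, set[str]]:
    """Assign every block to `replicas` distinct nodes, round-robin.

    Returns a map of node name -> set of block IDs (SHA-256 hex digests).
    The placement rule is hypothetical; a real network would choose nodes
    using its own strategy and would encrypt blocks before sending them.
    """
    if not 1 <= replicas <= len(nodes):
        raise ValueError("replicas must be between 1 and the number of nodes")
    placement: dict[str, set[str]] = {node: set() for node in nodes}
    for index, block in enumerate(blocks):
        block_id = hashlib.sha256(block).hexdigest()
        for offset in range(replicas):
            placement[nodes[(index + offset) % len(nodes)]].add(block_id)
    return placement


def survives_loss_of(node: str, placement: dict[str, set[str]]) -> bool:
    """True if every block is still held somewhere after `node` disappears."""
    all_ids = set().union(*placement.values())
    remaining = set().union(*(ids for name, ids in placement.items() if name != node))
    return remaining == all_ids


# Hypothetical example: 48 bytes of data, 8-byte blocks, four nodes, two copies each.
blocks = split_into_blocks(bytes(range(48)), block_size=8)
placement = place_blocks(blocks, ["node-a", "node-b", "node-c", "node-d"], replicas=2)
for node, ids in placement.items():
    print(node, "holds", len(ids), "of", len(blocks), "blocks")
```

In this toy setup, no single node holds every block, so compromising one node does not expose the full data set, and because each block lives on two nodes, the loss of any one node leaves all blocks recoverable.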

Distributed systems are flexible systems

Second, distributed systems provide a greater diversity of options, allowing you to respond faster to changing conditions:

- Services like Hivenet’s are “thin” compared to the “value-add” PaaS offerings of major providers. This reduces vendor lock-in, increases the choice of libraries and frameworks, and allows you to deploy the capabilities you need when and where you need them.

- Your architecture and code are within your control. This means that when regulatory requirements change, you can adapt without waiting on critical changes.

- Distributed architectures enable the adoption of agile sourcing strategies. For example, you could leverage spare GPU capacity held by small consumers (e.g., universities) without violating US government restrictions, or you could access alternative GPUs (e.g., RTXs rather than A100s) not offered by hyperscalers.

Distributed systems are more open systems

A service like Hivenet is built on inherently more open concepts like “peer-to-peer,” where there is no hierarchy. We believe that distributed services are for everyone - anyone can contribute resources to our network and use them. This means:

- Users of a distributed system are insulated from concerns about the lobbying and intentions of large multinational corporations.

- Hivenet’s stack leverages mature, well-resourced, active open-source technologies like IPFS and LibP2P [31,32]. This offers greater transparency to our users, and as Hivenet is a research-led organization, we actively contribute to the knowledge pool.

- Contributing to the network minimizes unused capacity, meaning we can all benefit from more efficient, sustainable resources.

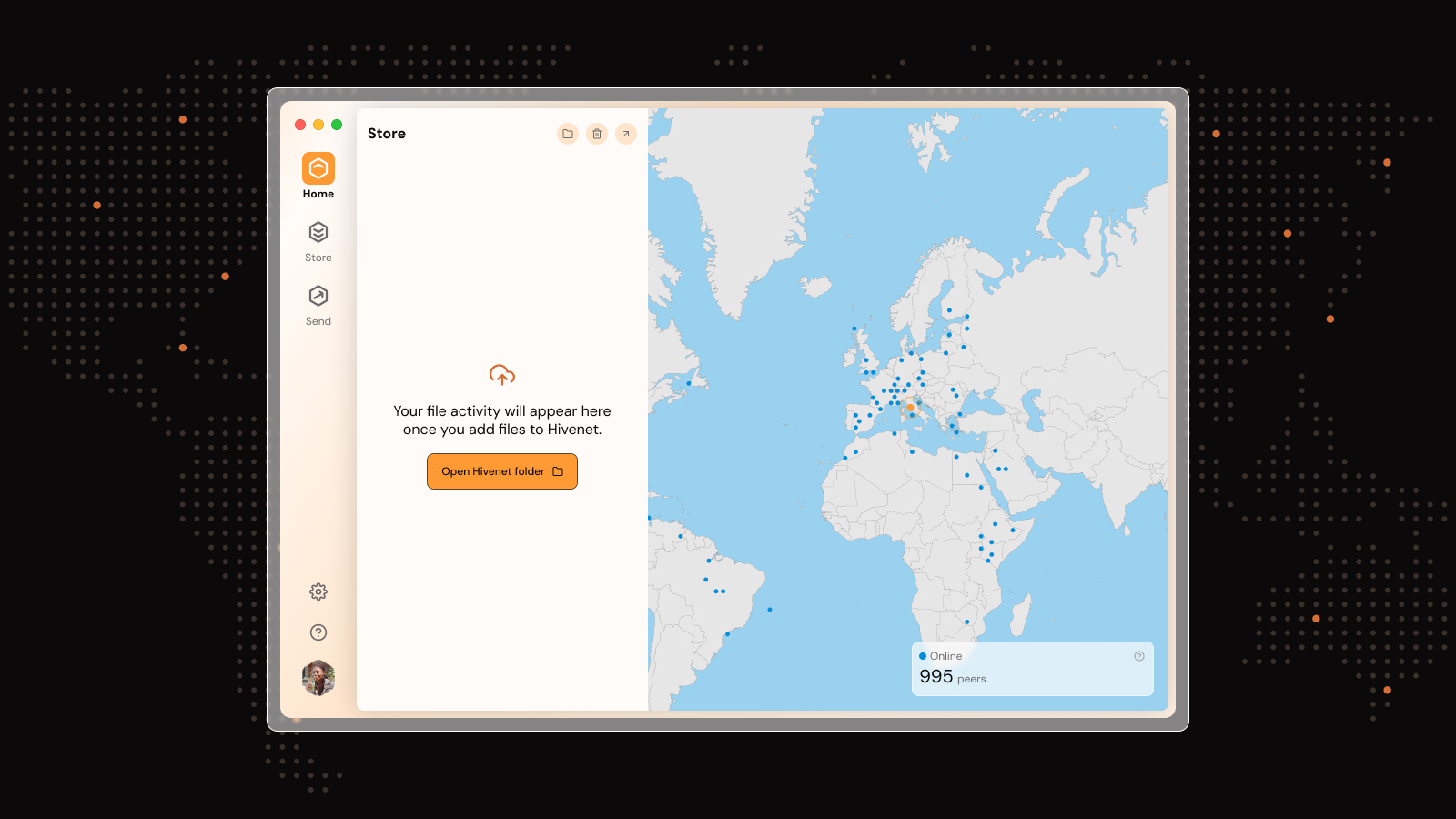

Hivenet is a distributed system

Since 2022, Hivenet’s distributed storage service has scaled to over 500,000 users and 320,000 contributors - with the recent addition of a file transfer service (similar to WeTransfer), photo backups, and more features to come. Earlier in 2025, you may have seen the announcement that Hivenet’s distributed compute service is officially open - leveraging the same secure, efficient Hivenet stack to deliver reliable, fast, and competitively priced infrastructure - and we’d like to emphasize some relevant points in the context of sovereignty [33, 34].

First, we have already discussed the chilling effect that government-led GPU restrictions can have - while developments like the recent DeepSeek R1 release are welcome, the fact remains that even well-performing, open-weight models require non-trivial compute resources to adapt to real-world challenges [35, 36]. Hivenet’s Compute is well-positioned to open up a globally diverse and powerful network of GPUs for businesses at any scale. We’ve also designed our service from the ground up with AI use cases in mind, with ready-baked templates for common ML frameworks to get you started (also: check out our tutorial on how to serve Llama 3.1-8B with Compute [37]).

Second, we are clear that distributed resources should not be associated with increased risk or reduced quality. Compute’s platform is built on hardware provided by certified partners, delivering Tier-3 data center equivalent standards, a guaranteed 99.9% uptime SLA, and fully dedicated hardware [38]. At a more granular level, rather than the A100s common with hyperscalers, Compute leverages “consumer-grade” RTX 4090 GPUs that can be more cost-efficient and better suited for many usage scenarios. For example, Figure 1 shows how RTX 4090s outperformed other options in relative throughput per dollar in LambdaLabs’s PyTorch benchmark [39]. With this level of quality at your foundation, you can confidently implement whichever security, compliance, and operational tooling you require.

Figure 1: comparing various models of GPUs in LambdaLabs PyTorch GPU benchmark
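
For readers who want to put numbers on these two claims, the short Python sketch below shows the arithmetic behind an uptime SLA and a “relative throughput per dollar” comparison. The function names are our own for this example, and the GPU figures are made-up placeholders (labeled `gpu_a` and `gpu_b`), not benchmark results or prices; substitute measured throughput and your own hourly cost.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def allowed_downtime_hours(uptime_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Maximum downtime that still meets an uptime SLA over the given period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_hours * (1 - uptime_percent / 100)


def relative_throughput_per_dollar(
    throughput: dict[str, float], price: dict[str, float], baseline: str
) -> dict[str, float]:
    """Throughput per dollar for each option, normalized so the baseline is 1.0."""
    base = throughput[baseline] / price[baseline]
    return {name: (throughput[name] / price[name]) / base for name in throughput}


# A 99.9% uptime SLA leaves roughly 8.76 hours of downtime per year.
print(f"{allowed_downtime_hours(99.9):.2f} hours per year")

# Placeholder inputs only: throughput in arbitrary units, price in dollars per hour.
example_throughput = {"gpu_a": 100.0, "gpu_b": 80.0}
example_price = {"gpu_a": 2.0, "gpu_b": 1.0}
print(relative_throughput_per_dollar(example_throughput, example_price, baseline="gpu_a"))
```

The second function shows why a GPU with lower raw throughput can still come out ahead: what matters for cost-efficiency is the ratio of throughput to price, not throughput alone.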

In summary, Hivenet recognizes that the world is increasingly complex across many different dimensions - some within the control of our community and some that are not. Whether you have concerns about a fluid regulatory and compliance landscape, the implications of the geopolitical stance of major powers, or simply have had enough of a monopolized market for key technology platforms, Hivenet is committed to our mission of empowering you to attain digital sovereignty. This underpins our approach and everything we do daily - if you want to know more, we would love to hear from you today.
